Demystifying AI: The Power of Explainable Models

Artificial Intelligence (AI) has become an integral part of our daily lives, influencing decisions in finance, healthcare, and more. However, the opacity of AI models has led to concerns about bias, accountability, and trust. This article delves into the importance of Explainable AI Models and their role in ensuring transparency and understanding in the increasingly complex world of artificial intelligence.

To gain a deeper understanding of Explainable AI Models, visit www.misuperweb.net. This platform serves as a resource for staying informed about cutting-edge technologies and their transformative impact on explainable AI.

The Need for Transparency in AI Decision-Making

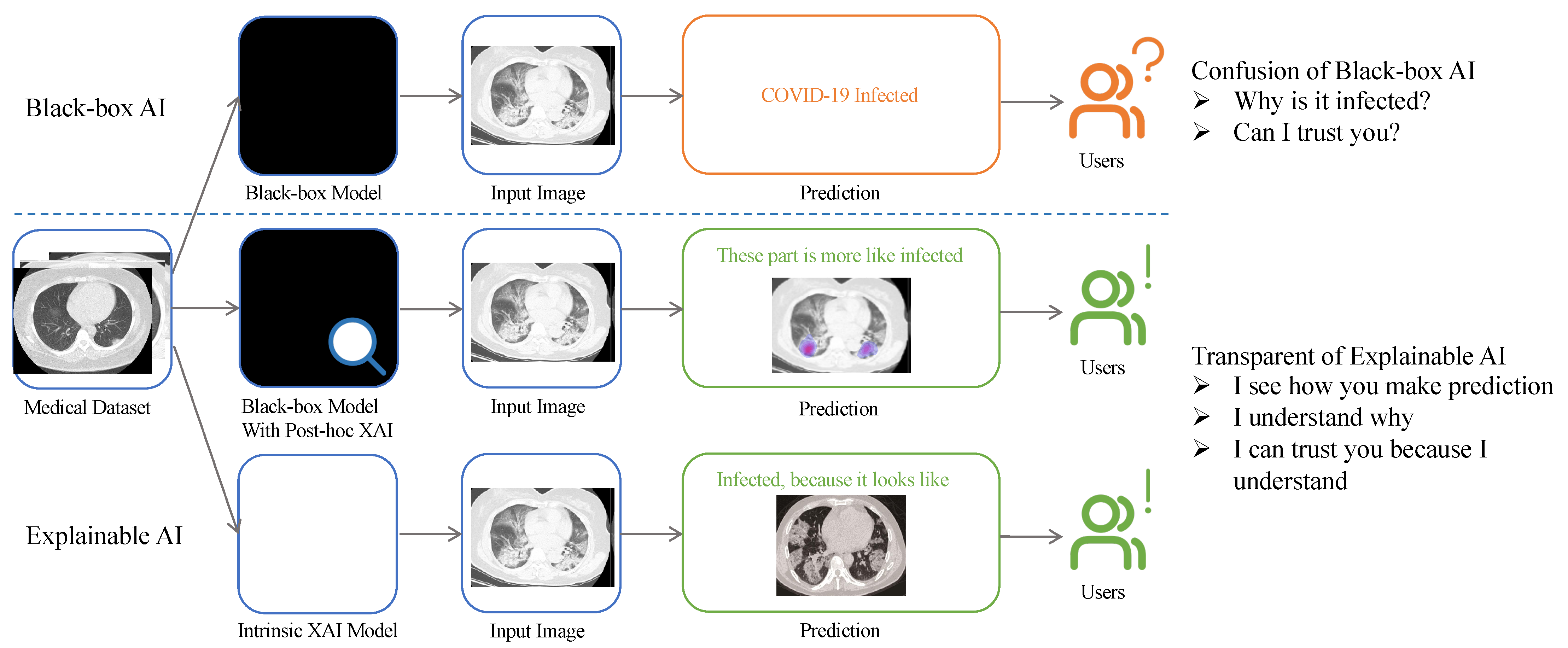

Many AI systems, particularly machine learning models, operate as black boxes: their inputs and outputs are visible, but how they arrive at a specific decision is not. This lack of transparency makes bias, discrimination, and unintended consequences harder to detect. Explainable AI Models address these concerns by providing insights into the decision-making process, making AI systems more accountable and trustworthy.

Building Trust through Model Interpretability

Trust is a critical factor in the widespread acceptance and adoption of AI technologies. Explainable AI Models contribute to building trust by offering transparency into the factors influencing decisions. When users, stakeholders, or regulatory bodies can comprehend how an AI system arrives at its conclusions, they gain confidence in the technology’s reliability and fairness.

Applications in Sensitive Domains: Healthcare and Finance

In domains where the impact of AI decisions is profound, such as healthcare and finance, the need for explainability is paramount. In healthcare, Explainable AI Models help medical professionals and patients understand the rationale behind diagnoses and treatment recommendations. In finance, transparent AI models support regulatory compliance and fair, unbiased lending practices.

Addressing Bias and Fairness Concerns

Bias in AI algorithms is a growing concern, because models can reflect and amplify societal biases present in their training data. Explainable AI Models support the identification and mitigation of bias by providing visibility into the features influencing decisions. This transparency enables developers to address bias issues and create fairer, more equitable AI systems.
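
One simple way to gain visibility into whether a feature influences decisions is a counterfactual flip test: change only that feature and count how often the outcome changes. The sketch below is illustrative only. The `toy_lender` model, the `group` and `income` fields, and the applicant records are hypothetical and invented for this example, and a real audit would need far more data and care.

```python
def flip_rate(predict, rows, feature, values=(0, 1)):
    """Fraction of rows whose prediction changes when `feature` is swapped
    between its two possible values, with everything else held fixed."""
    a, b = values
    changed = 0
    for row in rows:
        flipped = dict(row)
        flipped[feature] = b if row[feature] == a else a
        if predict(flipped) != predict(row):
            changed += 1
    return changed / len(rows)


def toy_lender(applicant):
    # Hypothetical model with a deliberately unfair rule:
    # group 1 faces a higher income threshold than group 0.
    threshold = 50 if applicant["group"] == 0 else 60
    return applicant["income"] >= threshold


# Made-up applicants, for illustration only.
applicants = [
    {"income": 45, "group": 0},
    {"income": 55, "group": 0},
    {"income": 55, "group": 1},
    {"income": 65, "group": 1},
]

rate = flip_rate(toy_lender, applicants, "group")
print(f"Decisions that change when only 'group' changes: {rate:.0%}")
```

A flip rate above zero for a protected attribute is a signal that the feature is directly influencing decisions and deserves a closer look. A rate of zero does not prove fairness, since other features can act as proxies.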

Legal and Ethical Considerations

As AI becomes more pervasive, legal and ethical considerations surrounding its use intensify. Explainable AI Models align with legal frameworks and ethical guidelines that demand transparency and accountability. This helps AI systems operate within regulatory boundaries and adhere to ethical standards, protecting individuals’ rights and privacy.

Balancing Complexity and Interpretability

AI models often operate in complex, high-dimensional spaces, making them challenging to interpret. Striking a balance between the complexity required for optimal performance and the interpretability needed for human understanding is a crucial challenge in AI development. Explainable AI Models provide a means of working toward this balance, offering nuanced understanding while limiting the cost to performance.
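
One common way to approach this trade-off is a global surrogate: keep the complex model for predictions, and fit a much simpler model to imitate it so that humans can read the approximation. The following is a minimal sketch under stated assumptions. The `black_box` function stands in for an opaque model and is hypothetical, and the surrogate here is a single-threshold rule (a decision stump), chosen only because it is easy to read.

```python
def fit_stump(X, labels):
    """Find the single rule `row[feature] >= threshold` that agrees most
    often with `labels`. Returns (fidelity, feature, threshold)."""
    best = (0.0, 0, 0)
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            agree = sum((row[j] >= t) == lab for row, lab in zip(X, labels))
            fidelity = agree / len(X)
            if fidelity > best[0]:
                best = (fidelity, j, t)
    return best


def black_box(row):
    # Hypothetical opaque model; in practice this would be a trained model.
    return 4 * row[0] + row[1] >= 25


# Probe the black box on a grid of integer inputs and record its answers.
grid = [[a, b] for a in range(11) for b in range(11)]
labels = [black_box(row) for row in grid]

fidelity, feature, threshold = fit_stump(grid, labels)
print(f"Surrogate rule: feature {feature} >= {threshold} "
      f"(agrees with the black box on {fidelity:.1%} of inputs)")
```

The fidelity score makes the trade-off explicit: the one-line rule is easy to explain, but it only approximates the black box, and the gap between the two is the interpretability cost being paid.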

User Empowerment and Collaboration with AI

Explainable AI Models empower end-users by making AI systems more accessible and understandable. When users can comprehend the logic behind AI-generated recommendations or decisions, they are more likely to engage with and trust the technology. This transparency facilitates collaboration between humans and AI, creating a symbiotic relationship that leverages the strengths of both.

Innovations in Explainable AI Techniques

The field of explainable AI is dynamic, with ongoing research leading to innovations in interpretability techniques. From feature importance analysis to model-agnostic approaches, researchers are continuously developing methods to enhance the explainability of AI models. Staying informed about these innovations is crucial for practitioners seeking to implement the latest and most effective explainability techniques.
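
As an illustration of how feature importance analysis and a model-agnostic approach can combine, the sketch below implements permutation importance: shuffle one feature at a time and measure how much the model's accuracy drops. It only needs a prediction function, not the model's internals. The `toy_model` and the random data are hypothetical and exist only to make the example runnable.

```python
import random


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)

    def accuracy(rows):
        return sum(predict(r) == t for r, t in zip(rows, y)) / len(y)

    baseline = accuracy(X)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    # Hypothetical black-box model that only looks at feature 0.
    return 1 if row[0] > 0.5 else 0


# Synthetic data, for illustration only.
data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [toy_model(row) for row in X]

scores = permutation_importance(toy_model, X, y)
for j, score in enumerate(scores):
    print(f"feature {j}: mean accuracy drop {score:.3f}")
```

Because the toy model ignores feature 1, shuffling that column never changes a prediction and its importance comes out as zero, while shuffling feature 0 causes a large drop. Established libraries offer more robust versions of this idea.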

Educational Initiatives: Fostering Understanding of AI Systems

Education plays a pivotal role in demystifying AI and its inner workings. Initiatives focused on explaining AI models to non-experts, such as policymakers, business leaders, and the general public, contribute to a more informed and responsible adoption of AI technologies. These educational efforts create a foundation for responsible AI use across various sectors.

The Future of Explainable AI: Toward Responsible AI Development

The future of AI development is closely tied to the advancement of Explainable AI Models. As the field evolves, the focus will be on refining existing techniques, exploring novel approaches, and integrating explainability into the entire AI development lifecycle. This trajectory is intended to keep AI technologies aligned with ethical principles, legal standards, and societal expectations.

In conclusion, the adoption of Explainable AI Models is pivotal for navigating the complex landscape of artificial intelligence. By demystifying AI decision-making processes, these models contribute to transparency, accountability, and trust. As AI continues to permeate various aspects of our lives, understanding and embracing the principles of explainability are essential for fostering responsible and ethical AI development.