Demystifying Insights: The Significance of Explainable Machine Learning

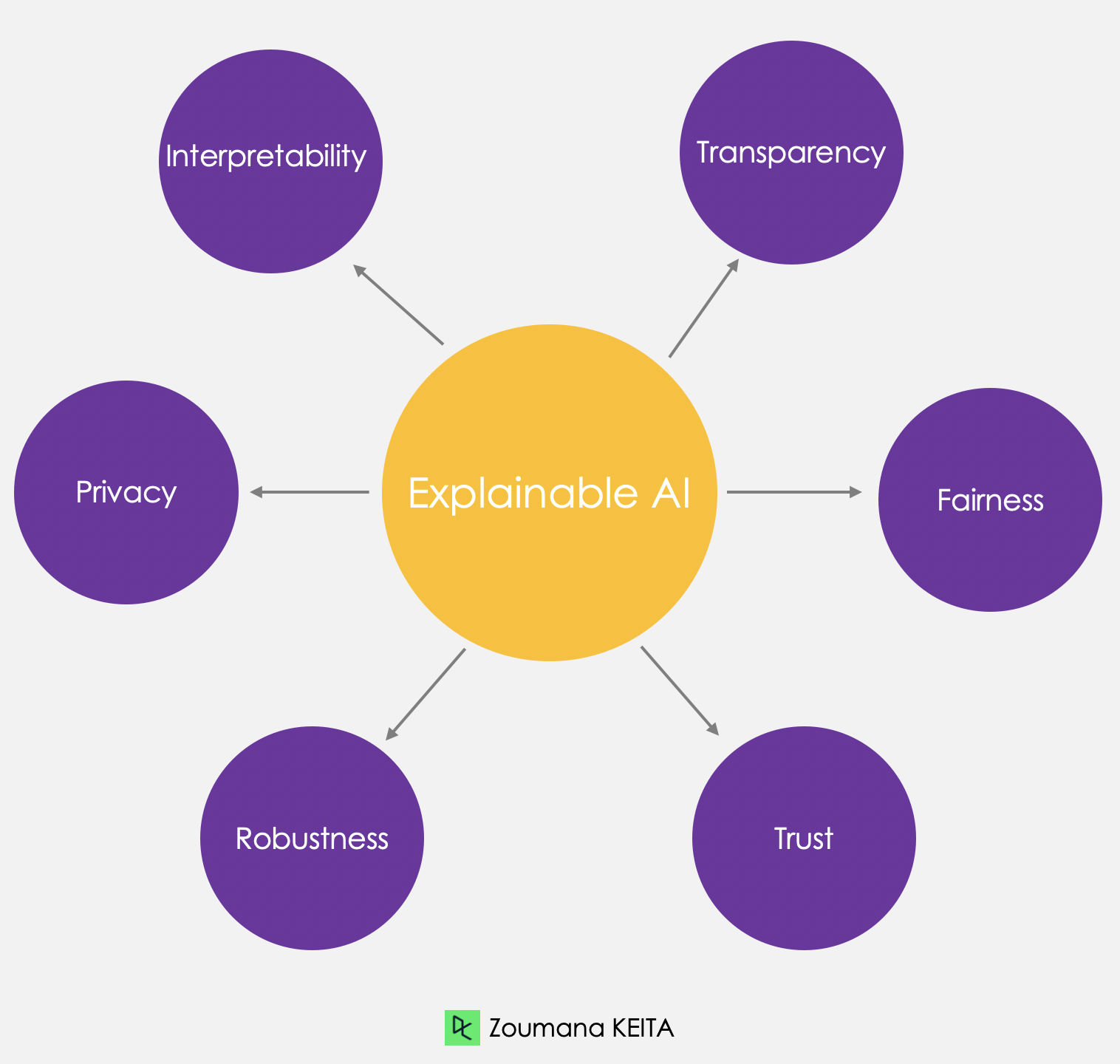

In machine learning, transparency and interpretability are becoming increasingly vital. Explainable Machine Learning, commonly discussed under the broader label of Explainable AI (XAI), is at the forefront of this movement, shifting the field away from “black box” algorithms and toward models whose behavior can be understood and held to account.

The Challenge of Black Box Algorithms

Many machine learning models, particularly complex ones such as deep neural networks and large ensembles, operate as black boxes: users cannot readily see the reasoning behind their predictions or decisions. This lack of transparency is a significant obstacle, especially in critical applications such as healthcare, finance, and criminal justice, where understanding the decision-making process is paramount.

Unveiling the Inner Workings

Explainable Machine Learning seeks to unveil the inner workings of these complex algorithms, providing users with insights into how decisions are reached. This transparency not only fosters trust in the technology but also allows stakeholders to validate and verify the model’s outputs, ensuring that decisions align with ethical and regulatory standards.
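To make "insights into how decisions are reached" concrete, here is a minimal sketch using the simplest possible case: a linear scoring model, where each feature's contribution to the score is just its weight times its value. The feature names, weights, and threshold are hypothetical and chosen only for illustration; they do not come from any real lending model.

```python
# Hypothetical linear loan-scoring model. Weights, bias, threshold, and
# feature names are illustrative assumptions, not a real scoring system.
WEIGHTS = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
BIAS = -1.0
THRESHOLD = 0.0


def score(applicant):
    """Return the raw model score: bias plus the weighted sum of features."""
    return BIAS + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)


def explain(applicant):
    """Return (feature, contribution) pairs, largest absolute effect first."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


# Income is expressed in tens of thousands for this toy example.
applicant = {"income": 5.0, "debt_ratio": 0.75, "years_employed": 2.0}
total = score(applicant)
decision = "approve" if total > THRESHOLD else "decline"

print(f"decision: {decision} (score {total:+.2f})")
for name, contribution in explain(applicant):
    print(f"  {name:>15}: {contribution:+.2f}")
```

Because the contributions and the bias add up exactly to the score, a stakeholder can see which factors pushed the decision in each direction. Complex models do not decompose this neatly, which is why dedicated explanation techniques exist for them.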

Enhancing Accountability and Trust

The transparency offered by Explainable Machine Learning enhances accountability. In sectors where high-stakes decisions are made, such as loan approvals or medical diagnoses, understanding why a model reaches a specific conclusion is crucial. XAI helps decision-makers judge when to trust the model and helps ensure that users are not left in the dark about critical choices that impact their lives.

Navigating Regulatory Compliance

As regulations around AI and machine learning become more stringent, compliance with ethical standards and data protection regulations is essential. Explainable Machine Learning aids organizations in navigating these regulatory landscapes by providing a clear and interpretable view of how models use data to make decisions, mitigating risks associated with non-compliance.

Real-world Applications of XAI

To explore real-world applications and advancements in Explainable Machine Learning, visit Explainable Machine Learning for an in-depth look at cutting-edge technologies that bring transparency to AI models.

Building Trust in AI

Building trust in artificial intelligence is a critical aspect of its widespread adoption. By making machine learning processes more understandable, Explainable Machine Learning addresses concerns about bias, fairness, and potential errors. This trust-building element is crucial for integrating AI solutions into various industries and ensuring their acceptance by users.

Addressing Bias and Fairness

One of the key challenges in machine learning is the potential for bias in algorithms. Explainable Machine Learning provides a means to identify and address bias by shedding light on the features and factors influencing the model’s decisions. This transparency enables developers to rectify biased patterns, creating fairer and more inclusive AI systems.
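One common way to see which features influence a model's decisions is permutation importance: shuffle one feature's values and measure how much the model's output quality drops. The sketch below is a simplified, standard-library-only version under stated assumptions. The `black_box` function is a hypothetical stand-in for an opaque model, the data is synthetic, and the labels are set to the model's own predictions so the result measures how much the model relies on each feature rather than how accurate it is.

```python
import random


def black_box(row):
    """Hypothetical opaque model: approves high income outside neighborhood group 1."""
    income, neighborhood, shoe_size = row  # shoe_size is deliberately ignored
    return 1 if income > 50 and neighborhood == 0 else 0


def accuracy(model, rows, labels):
    """Fraction of rows where the model output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, column, rng, repeats=20):
    """Average accuracy drop after shuffling one column across the rows."""
    baseline = accuracy(model, rows, labels)
    drops = []
    for _ in range(repeats):
        shuffled = [r[column] for r in rows]
        rng.shuffle(shuffled)
        permuted = [r[:column] + (v,) + r[column + 1:] for r, v in zip(rows, shuffled)]
        drops.append(baseline - accuracy(model, permuted, labels))
    return sum(drops) / repeats


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [(rng.randint(20, 100), rng.randint(0, 1), rng.randint(36, 46)) for _ in range(200)]
labels = [black_box(r) for r in rows]  # model's own outputs, so baseline accuracy is 1.0
names = ["income", "neighborhood", "shoe_size"]
importances = {
    name: permutation_importance(black_box, rows, labels, i, rng)
    for i, name in enumerate(names)
}

for name, value in sorted(importances.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:>12}: {value:.3f}")
```

A nonzero importance for `neighborhood` would be a prompt for a developer to ask whether that feature acts as a proxy for a protected attribute. The sketch only surfaces the dependence; deciding whether it is unfair, and how to correct it, still requires human judgment.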

Balancing Complexity and Simplicity

Explainability doesn’t have to mean sacrificing the power of complex machine learning models. The goal is to find the right balance between complexity and simplicity. Many explanation techniques are applied after training to an existing model, so they can offer interpretable insights while leaving the underlying algorithm’s capabilities intact.
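One technique that illustrates this balance is a global surrogate: keep the complex model as it is, then fit a much simpler model to imitate its predictions and report how often the two agree (the fidelity). The sketch below is an assumption-laden toy: `black_box` is a hypothetical nonlinear model, the data is synthetic, and the surrogate is a one-rule decision stump found by brute-force search.

```python
import math
import random


def black_box(income, debt_ratio):
    """Hypothetical opaque model with a nonlinear interaction between features."""
    return 1 if math.tanh(0.05 * income - 2.0) - debt_ratio ** 2 > 0 else 0


def fit_stump(rows, targets):
    """Find the single-feature threshold rule that best reproduces the targets.

    Returns (fidelity, feature_index, threshold, direction), where direction 1
    means "predict 1 when value > threshold" and direction 0 means the reverse.
    """
    best = None
    for feature in range(len(rows[0])):
        for threshold in sorted({r[feature] for r in rows}):
            for direction in (1, 0):
                preds = [direction if r[feature] > threshold else 1 - direction for r in rows]
                fidelity = sum(p == t for p, t in zip(preds, targets)) / len(rows)
                if best is None or fidelity > best[0]:
                    best = (fidelity, feature, threshold, direction)
    return best


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [(rng.uniform(0, 100), rng.uniform(0, 1)) for _ in range(300)]
targets = [black_box(*r) for r in rows]  # the surrogate learns the model's outputs

fidelity, feature, threshold, direction = fit_stump(rows, targets)
names = ["income", "debt_ratio"]
comparison = ">" if direction == 1 else "<="
print(f"surrogate rule: predict 1 when {names[feature]} {comparison} {threshold:.2f}")
print(f"fidelity to black box: {fidelity:.1%}")
```

The surrogate's single rule is easy to read, but its fidelity will generally fall short of 100%, and that gap is the trade-off in practice: a simpler explanation captures less of what the complex model actually does.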

The Evolution of Explainable Machine Learning

As technology progresses, so does the field of Explainable Machine Learning. Innovations in model interpretability, visualization techniques, and user-friendly interfaces continue to reshape how we perceive and interact with AI. Staying informed about these advancements is essential for organizations aiming to incorporate the latest in transparent and explainable AI solutions.

Conclusion

Explainable Machine Learning marks a paradigm shift in the world of artificial intelligence. By providing transparency and interpretability, XAI addresses the challenges associated with black box algorithms, fostering trust, accountability, and compliance. As the technology evolves, the integration of Explainable Machine Learning will likely become standard practice, helping ensure that AI systems are not only powerful but also understandable and trustworthy in diverse applications.